So the project has come to an end… but what have we achieved?

Our final objective was to get the Pi/Roomba/ROS/laser combination set up and working, so that the robot could move around an environment and avoid obstacles. As a further goal, we aimed to have the robot move around a room, map it and then navigate around it. Most of this has been completed, apart from the last part: having the robot navigate a map it has created itself, and telling it to move to specific locations within that map.

In fact, by the end of this week we had finished all of the initial goals we set on the first day of the project. Along the way we changed a lot about how we achieved them (e.g. using a laser instead of a Kinect), but we got there nevertheless. Most of the last week was spent integrating the ROS navigation stack into the project, and the first goal was to get the robot mapping the room. By the end of the week we had managed this, after a lot of complications! Simply understanding how the navigation stack works was a feat in itself, given the vast amount of documentation and the number of files that need to be set up for the transform (tf) trees. After some guidance from our supervisor, we fixed a lot of problems and discovered that the root of a major one was an inaccurate time and date on the Raspberry Pi, which made any recorded ROS messages unusable. Combining this with our existing obstacle-avoidance package, we were able to create a number of maps, two of which are shown below!

The first map was created simply by setting up a small, square(ish) enclosure and placing the robot inside it. We recorded the robot moving around for a short period of time, then played the resulting rosbag file back into gmapping, which uses SLAM (Simultaneous Localization and Mapping) to build the map. Watching the map being built up in RViz during playback was impressive, and it could easily be used as a demonstration or visualization at Open Days and similar events.

The second map is our attempt at mapping an entire room, which didn't exactly turn out perfectly and has some notable faults. The unmapped section in the center is where obstacles were located, but they do not appear as they should. This is most likely due to the number of rotations the program performed while mapping the area, which made the odometry very inaccurate. This could probably be greatly improved simply by changing the program to rotate less often, or more slowly.

Unfortunately, we did not manage to implement our ultimate goal: a system that lets the user specify points on the map, which the robot would then navigate to autonomously. From what we have seen, this should not be hard to add, since the ROS navigation stack handles a lot of it by itself.
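For anyone picking up that last goal: in ROS 1, a navigation goal is typically a geometry_msgs/PoseStamped expressed in the map frame and sent to the move_base node (for example as the target_pose of a move_base_msgs/MoveBaseGoal via actionlib). Its orientation is a quaternion, so even a simple "go here, face that way" request needs a yaw-to-quaternion conversion. The minimal sketch below shows only that step in plain Python so it runs without ROS installed; `GoalPose` and `make_goal` are hypothetical names, not part of any ROS API, and the example coordinates are made up.

```python
import math
from dataclasses import dataclass


@dataclass
class GoalPose:
    """Hypothetical plain-Python stand-in for a geometry_msgs/PoseStamped goal."""
    frame_id: str  # coordinate frame the goal is expressed in
    x: float       # position in metres
    y: float
    qx: float      # orientation as a unit quaternion (x, y, z, w)
    qy: float
    qz: float
    qw: float


def make_goal(x: float, y: float, yaw: float, frame_id: str = "map") -> GoalPose:
    """Build a planar goal from a position (x, y) and a heading yaw in radians."""
    half = yaw / 2.0
    # A pure rotation about the z axis has quaternion (0, 0, sin(yaw/2), cos(yaw/2)).
    return GoalPose(frame_id, x, y, 0.0, 0.0, math.sin(half), math.cos(half))


# Hypothetical example: 1.5 m along x and 0.5 m along -y from the map origin, facing +y.
goal = make_goal(1.5, -0.5, math.pi / 2)
print(goal)
```

In a real node these fields would be copied into the ROS message rather than a dataclass. RViz's "2D Nav Goal" tool produces exactly this kind of pose and, by default, publishes it on move_base_simple/goal, which may be the lowest-effort way to let a user click a point on the map.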

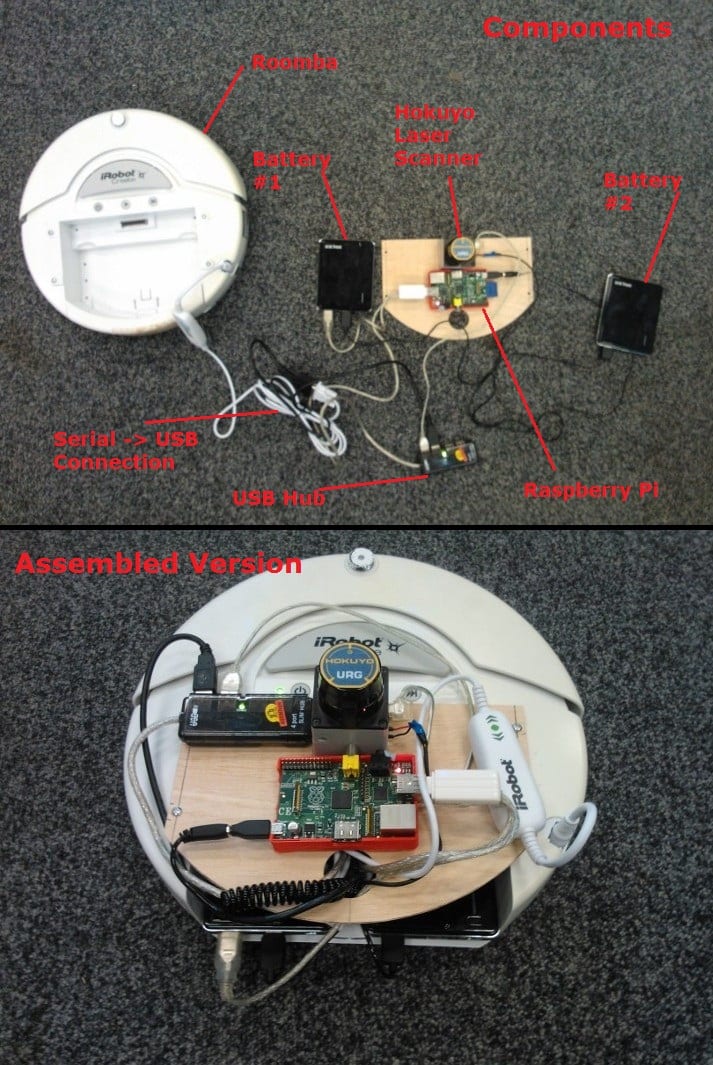

In terms of the actual robot set-up itself, the final product looks something like the following!

The top half of the image shows the guts of the robot and highlights the set-up used during the project. Because many of the components draw a lot of power, two battery packs were needed to supply constant, sufficient power to the system. The robot can run from a single battery pack, but it then becomes unreliable. At the moment the set-up is rather hacked together and janky, but it does the job and the aesthetics can be sorted out later ;-). That said, a lot of thought went into it, and various set-ups were tried and tested along the way.

Now that the project is over, the whole team is happy to have achieved so much in just three weeks, and we have genuinely enjoyed the project throughout. There were certainly plenty of frustrating moments (most often caused by the Pi!), but the team worked through them and completed everything in a timely fashion. We hope we have laid a strong foundation for other budding ROS users to come along and continue working on this project!